Hannah Devinney, a doctoral candidate at Umeå University, recently gave a lecture on “Bias in Natural Language Processing (NLP)” for the Adlede team. In the lecture, Hannah focused on gender bias in NLP and shared some tools for mitigating this bias.

This is extremely important for Adlede: we advocate for fair and ethical advertising through contextual advertising, and our contextual advertising processes depend heavily on NLP.

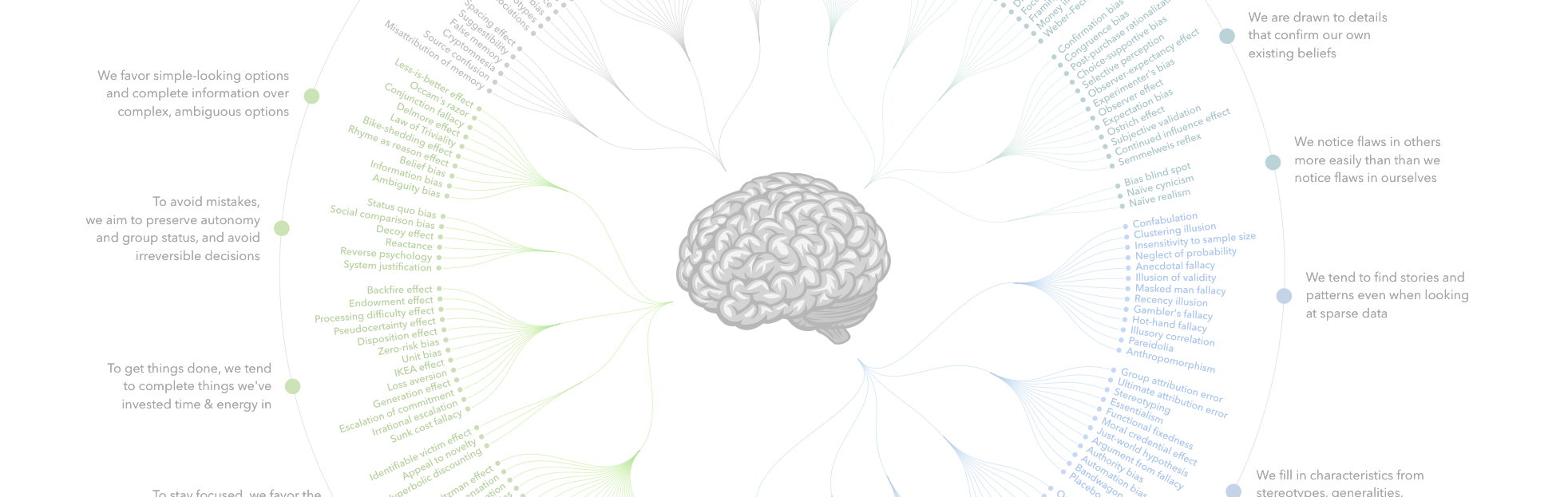

Image by John Manoogian III (Wikimedia)

NLP models are often trained on data that contains clear gender bias. Some examples of this bias look like the following:

Such bias in NLP can lead to negative consequences. These assumptions render certain groups “unintelligible”, and being unintelligible to society and to computer systems can have violent consequences. For example, such bias erases trans, nonbinary, and intersex people, and reduces cis people to stereotypes. A system built on these assumptions therefore cannot produce fair NLP.

We observe harms of allocation when systems distribute resources (material and non-material) in a way that is unfair towards subordinated groups. For example, a website shows STEM job advertisements to men but not to women with the same qualifications and profiles.

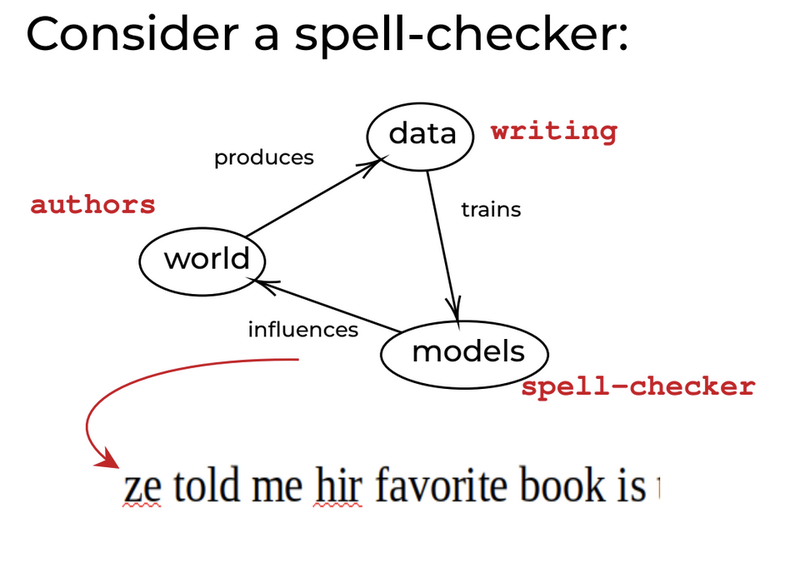

We observe harms of representation when systems reinforce the subordination of already-disadvantaged groups. For example, names associated with Black Americans receive less positive sentiment scores than names associated with white Americans. Representational harm also occurs when a system reinforces stereotypes that shape people’s biases, which in turn leads to more bias. The following figure illustrates this: biased data produces biased models, which influence the world, which then produces more biased data. Hence, the cycle of bias continues.

Hannah gave Adlede the example of a spell checker in which the gender-neutral pronoun “ze” was not included.
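To make the spell checker example concrete, here is a minimal sketch of a lexicon-based checker. The word lists and the `unknown_words` helper are hypothetical and tiny, chosen only for illustration; real spell checkers use far larger dictionaries and smarter matching. The point is simply that a pronoun missing from the lexicon gets flagged as an error, and including it fixes that.

```python
# Hypothetical, tiny lexicon for illustration only.
BASE_LEXICON = {"he", "she", "they", "him", "her", "them", "his", "hers",
                "their", "is", "writing", "a", "report"}

def unknown_words(text, lexicon):
    """Return the words in `text` that are not in `lexicon`,
    i.e. the words a simple spell checker would flag."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    return [w for w in words if w and w not in lexicon]

sentence = "Ze is writing a report."
print(unknown_words(sentence, BASE_LEXICON))       # ['ze']

# Adding gender-neutral neopronouns to the lexicon stops them being flagged.
INCLUSIVE_LEXICON = BASE_LEXICON | {"ze", "zir", "zirs", "hir"}
print(unknown_words(sentence, INCLUSIVE_LEXICON))  # []
```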

The key takeaway from this lecture is that we should be clear and critical about the (working) definitions we apply to our data. For example, we should check whether the data is biased and how it was collected.
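One simple way to start being critical about a text dataset is to look at how different groups are represented in it. The sketch below counts pronoun forms per group in a small corpus. The `PRONOUN_GROUPS` definitions and the sample corpus are hypothetical assumptions made for illustration, and they are themselves a "working definition" that deserves scrutiny. Raw counts are only a rough signal: “her” and “they” are ambiguous, and a skewed count does not prove how a model will behave.

```python
import re
from collections import Counter

# Hypothetical working definition of pronoun groups; adjust to your own context.
PRONOUN_GROUPS = {
    "he/him": {"he", "him", "his", "himself"},
    "she/her": {"she", "her", "hers", "herself"},
    "they/them": {"they", "them", "their", "theirs", "themself", "themselves"},
    "ze/zir": {"ze", "zir", "zirs", "zirself"},
}

def pronoun_counts(corpus):
    """Count how often each pronoun group occurs across all documents."""
    counts = Counter({group: 0 for group in PRONOUN_GROUPS})
    for doc in corpus:
        # Lowercase and split into alphabetic tokens.
        for token in re.findall(r"[a-z]+", doc.lower()):
            for group, forms in PRONOUN_GROUPS.items():
                if token in forms:
                    counts[group] += 1
    return dict(counts)

corpus = [
    "He is an engineer and his team trusts him.",
    "She is a nurse.",
    "They said their code was ready.",
]
print(pronoun_counts(corpus))
# {'he/him': 3, 'she/her': 1, 'they/them': 2, 'ze/zir': 0}
```

A zero count for a group, as for “ze/zir” here, is exactly the kind of gap that can make people unintelligible to a model trained on the data.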

Since we live in a normative society, we may never have a completely unbiased dataset. The following two approaches (which can be blended) can therefore be taken to mitigate bias in NLP:

By Kabir Fahria (They/them or hen)

Developer, Adlede